bro's coding

tensorflow.AUTOTUNE


Using a cache to speed up the input pipeline
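As a rough illustration of what the cache saves, the sketch below counts how often a source is re-read with and without `Dataset.cache()`. The generator `counting_generator` and the `calls` counter are made up for this example and are not part of the post's pipeline.

```python
import tensorflow as tf

calls = {"count": 0}  # hypothetical counter, used only for this illustration


def counting_generator():
    # Each fresh pass over the uncached source calls this function once.
    calls["count"] += 1
    for i in range(5):
        yield i


source = tf.data.Dataset.from_generator(counting_generator, output_types=tf.int64)

# In-memory cache: filled during the first complete pass, reused afterwards.
cached = source.cache()
first_pass = [int(x.numpy()) for x in cached]
second_pass = [int(x.numpy()) for x in cached]
calls_with_cache = calls["count"]

# Same two passes without a cache: the source is read again each time.
calls["count"] = 0
_ = [int(x.numpy()) for x in source]
_ = [int(x.numpy()) for x in source]
calls_without_cache = calls["count"]

print("source reads with cache:", calls_with_cache)
print("source reads without cache:", calls_without_cache)
```

The cache is only complete once a full pass over the dataset has finished, so the first epoch still pays the full loading cost.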

import tensorflow as tf
# Name used in TF 2.2; newer releases also expose it as tf.data.AUTOTUNE.
AUTOTUNE = tf.data.experimental.AUTOTUNE
import IPython.display as display
from PIL import Image
import numpy as np
import matplotlib.pyplot as plt
import os

tf.__version__

'2.2.0'

pathlib reference (object-oriented filesystem paths, annotated Python documentation): https://python.flowdas.com/library/pathlib.html

import pathlib
data_dir = tf.keras.utils.get_file(origin='https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz',
                                   fname='flower_photos', untar=True)
data_dir = pathlib.Path(data_dir)

Downloading data from https://storage.googleapis.com/download.tensorflow.org/example_images/flower_photos.tgz
228818944/228813984 [==============================] - 2s 0us/step

image_count = len(list(data_dir.glob('*/*.jpg')))
image_count

3670

CLASS_NAMES = np.array([item.name for item in data_dir.glob('*') if item.name != "LICENSE.txt"])
CLASS_NAMES

array(['sunflowers', 'roses', 'tulips', 'daisy', 'dandelion'],
      dtype='<U10')

roses = list(data_dir.glob('roses/*'))
for image_path in roses[:3]:
    display.display(Image.open(str(image_path)))

# rescale=1./255 converts uint8 pixel values to floats in the [0, 1] range.
image_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)

BATCH_SIZE = 32
IMG_HEIGHT = 224
IMG_WIDTH = 224
STEPS_PER_EPOCH = np.ceil(image_count/BATCH_SIZE)

train_data_gen = image_generator.flow_from_directory(directory=str(data_dir),
                                                     batch_size=BATCH_SIZE,
                                                     shuffle=True,
                                                     target_size=(IMG_HEIGHT, IMG_WIDTH),
                                                     classes = list(CLASS_NAMES))

Found 3670 images belonging to 5 classes.
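The `STEPS_PER_EPOCH` arithmetic can be checked by hand. This is a small sketch using only the image count and batch size reported above; the variable names are local to this example.

```python
import math

image_count = 3670  # number of JPEG files reported above
batch_size = 32     # BATCH_SIZE from the post

# Round up so the final, partially filled batch is still counted.
steps_per_epoch = math.ceil(image_count / batch_size)

# Images left over for the last batch of an epoch.
last_batch_size = image_count - (steps_per_epoch - 1) * batch_size

print(steps_per_epoch)   # 115
print(last_batch_size)   # 22
```

Note that `np.ceil` returns a float (`115.0`), so it may need an `int(...)` cast wherever an integer step count is required.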

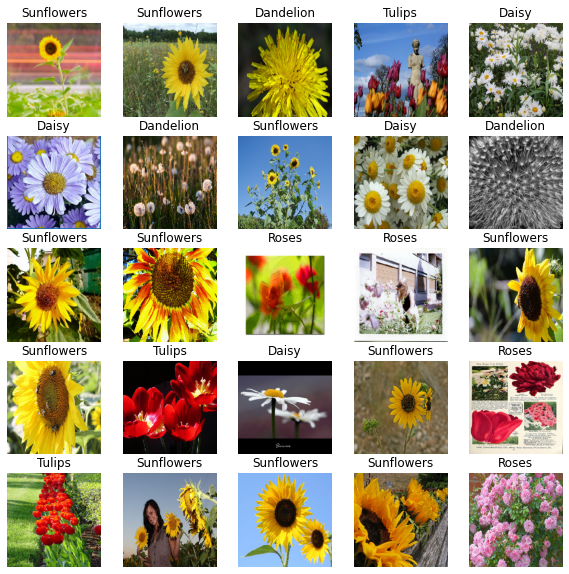

def show_batch(image_batch, label_batch):
    plt.figure(figsize=(10,10))
    for n in range(25):
        ax = plt.subplot(5,5,n+1)
        plt.imshow(image_batch[n])
        # The label is a one-hot vector; pick the class name where it equals 1.
        plt.title(CLASS_NAMES[label_batch[n]==1][0].title())
        plt.axis('off')

image_batch, label_batch = next(train_data_gen)
show_batch(image_batch, label_batch)

list_ds = tf.data.Dataset.list_files(str(data_dir/'*/*'))
for f in list_ds.take(5):
    print(f.numpy())

b'/root/.keras/datasets/flower_photos/roses/5892908233_6756199a43.jpg'
b'/root/.keras/datasets/flower_photos/roses/12045735155_42547ce4e9_n.jpg'
b'/root/.keras/datasets/flower_photos/sunflowers/175638423_058c07afb9.jpg'
b'/root/.keras/datasets/flower_photos/daisy/4258408909_b7cc92741c_m.jpg'
b'/root/.keras/datasets/flower_photos/tulips/8717900362_2aa508e9e5.jpg'

def get_label(file_path):
    # convert the path to a list of path components
    parts = tf.strings.split(file_path, os.path.sep)
    # The second to last is the class-directory
    return parts[-2] == CLASS_NAMES

def decode_img(img):
    # convert the compressed string to a 3D uint8 tensor
    img = tf.image.decode_jpeg(img, channels=3)
    # Use `convert_image_dtype` to convert to floats in the [0,1] range.
    img = tf.image.convert_image_dtype(img, tf.float32)
    # resize the image to the desired size.
    return tf.image.resize(img, [IMG_HEIGHT, IMG_WIDTH])

def process_path(file_path):
    label = get_label(file_path)
    # load the raw data from the file as a string
    img = tf.io.read_file(file_path)
    img = decode_img(img)
    return img, label

# Set `num_parallel_calls` so multiple images are loaded/processed in parallel.
labeled_ds = list_ds.map(process_path, num_parallel_calls=AUTOTUNE)

for image, label in labeled_ds.take(1):
    print("Image shape: ", image.numpy().shape)
    print("Label: ", label.numpy())

Image shape: (224, 224, 3)

Label: [ True False False False False]
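The boolean label printed above is a one-hot mask over `CLASS_NAMES`. The sketch below reproduces the same comparison with plain NumPy; the file path is a hypothetical example, and the class order is copied from the `CLASS_NAMES` output earlier in the post.

```python
import numpy as np

# Class order as printed earlier in the post.
class_names = np.array(['sunflowers', 'roses', 'tulips', 'daisy', 'dandelion'])

# Hypothetical path, used only to illustrate the comparison.
file_path = "flower_photos/sunflowers/example.jpg"

# The second-to-last path component is the class directory.
parts = file_path.split("/")

# Comparing one string against the array yields a boolean one-hot mask.
label = parts[-2] == class_names
print(label)

# Indexing with the mask recovers the class name, as show_batch does.
recovered = class_names[label][0]
print(recovered)
```

With this class order, a label whose first entry is `True` corresponds to a sunflower image.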

def prepare_for_training(ds, cache=True, shuffle_buffer_size=1000):
    # This is a small dataset, only load it once, and keep it in memory.
    # use `.cache(filename)` to cache preprocessing work for datasets that don't
    # fit in memory.
    if cache:
        if isinstance(cache, str):
            ds = ds.cache(cache)
        else:
            ds = ds.cache()

    ds = ds.shuffle(buffer_size=shuffle_buffer_size)

    # Repeat forever
    ds = ds.repeat()

    ds = ds.batch(BATCH_SIZE)

    # `prefetch` lets the dataset fetch batches in the background while the model
    # is training.
    ds = ds.prefetch(buffer_size=AUTOTUNE)

    return ds

train_ds = prepare_for_training(labeled_ds)

image_batch, label_batch = next(iter(train_ds))
show_batch(image_batch.numpy(), label_batch.numpy())
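To see what the ordering in `prepare_for_training` does, the same chain can be run on a toy dataset. This is only a sketch: `Dataset.range(10)` and the batch size of 4 stand in for the flower images and `BATCH_SIZE`.

```python
import tensorflow as tf

AUTOTUNE = tf.data.experimental.AUTOTUNE

# Toy stand-in for the labeled image dataset: the integers 0..9.
toy = tf.data.Dataset.range(10)

# Same order as prepare_for_training: cache, shuffle, repeat, batch, prefetch.
pipeline = (toy.cache()
               .shuffle(buffer_size=10)
               .repeat()
               .batch(4)
               .prefetch(buffer_size=AUTOTUNE))

# The dataset repeats forever, so take a fixed number of batches.
batches = [b.numpy() for b in pipeline.take(5)]
batch_sizes = [len(b) for b in batches]
flat = [int(v) for b in batches for v in b]

print(batch_sizes)
print(flat[:10])
```

Because `repeat()` comes before `batch()`, every batch is full, and a batch can straddle two epochs. Because `shuffle()` comes before `repeat()`, each pass over the data is shuffled on its own, so the first ten values are a permutation of 0..9.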

import time
default_timeit_steps = 1000

def timeit(ds, steps=default_timeit_steps):
    start = time.time()
    it = iter(ds)
    for i in range(steps):
        batch = next(it)
        # Print a progress dot every 10 batches.
        if i%10 == 0:
            print('.',end='')
    print()
    end = time.time()

    duration = end-start
    print("{} batches: {} s".format(steps, duration))
    print("{:0.5f} Images/s".format(BATCH_SIZE*steps/duration))

# `keras.preprocessing`
timeit(train_data_gen)

....................................................................................................
1000 batches: 93.27838158607483 s
343.05913 Images/s

# `tf.data`
timeit(train_ds)

....................................................................................................
1000 batches: 65.31680083274841 s
489.91989 Images/s

uncached_ds = prepare_for_training(labeled_ds, cache=False)
timeit(uncached_ds)

....................................................................................................
1000 batches: 13.038451194763184 s
2454.27923 Images/s

filecache_ds = prepare_for_training(labeled_ds, cache="./flowers.tfcache")
timeit(filecache_ds)

....................................................................................................
1000 batches: 39.23072385787964 s
815.68722 Images/s
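The Images/s figures follow directly from the durations, using the same formula as `timeit`. The sketch below recomputes them and adds a ratio against the `keras.preprocessing` run; the dictionary labels are descriptive names chosen for this example, and the durations are the ones printed above.

```python
BATCH_SIZE = 32
STEPS = 1000

# Durations in seconds, copied from the runs above.
durations = {
    "keras.preprocessing": 93.27838158607483,
    "tf.data, in-memory cache": 65.31680083274841,
    "tf.data, no cache": 13.038451194763184,
    "tf.data, file cache": 39.23072385787964,
}

# Same formula as timeit: images processed divided by elapsed seconds.
throughput = {name: BATCH_SIZE * STEPS / seconds
              for name, seconds in durations.items()}

baseline = throughput["keras.preprocessing"]
for name, images_per_s in throughput.items():
    print(f"{name}: {images_per_s:.2f} images/s "
          f"({images_per_s / baseline:.2f}x vs keras.preprocessing)")
```

These are single runs, so the ratios describe this session only.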
