bro's coding

sklearn.svm.SVC.decision bounds

import numpy as np

import matplotlib.pyplot as plt

# data set

from sklearn.datasets import load_breast_cancer

cancer=load_breast_cancer()

col1=0

col2=5

X=cancer.data[:,[col1,col2]]

y=cancer.target

from sklearn.model_selection import train_test_split

X_train,X_test,y_train,y_test=train_test_split(X,y)

X_mean=X_train.mean(axis=0)

X_std=X_train.std(axis=0)

X_train_norm=(X_train-X_mean)/X_std

X_test_norm=(X_test-X_mean)/X_std

# train and test

from sklearn.svm import SVC

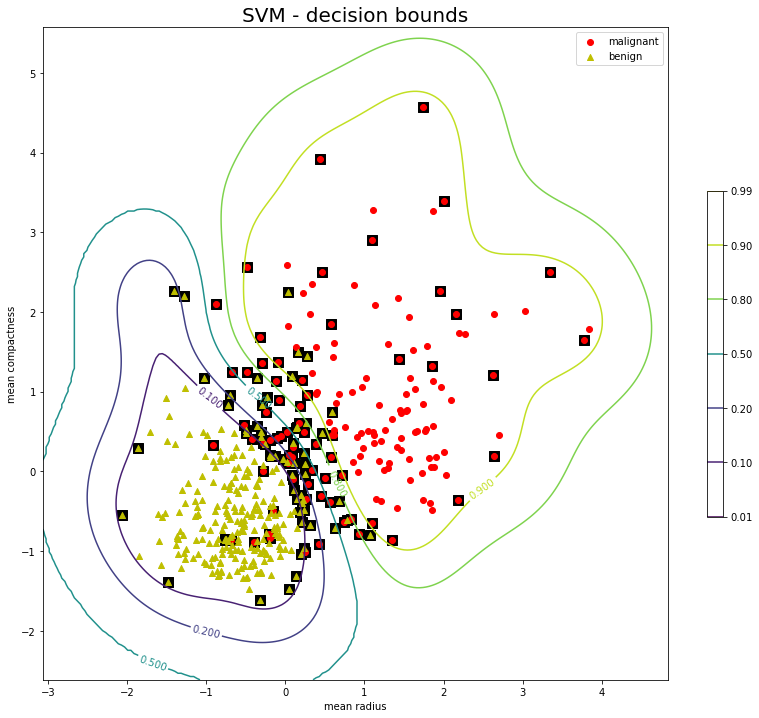

model=SVC(C=1,gamma=1,probability=True)

model.fit(X_train_norm,y_train)

train_score=model.score(X_train_norm,y_train)

test_score=model.score(X_test_norm,y_test)

print(train_score,test_score)

# visualization

scale=200

xmax=X_train_norm[:,0].max()+1

xmin=X_train_norm[:,0].min()-1

ymax=X_train_norm[:,1].max()+1

ymin=X_train_norm[:,1].min()-1

xx=np.linspace(xmin,xmax,scale)

yy=np.linspace(ymin,ymax,scale)

data1,data2=np.meshgrid(xx,yy)

X_grid=np.c_[data1.ravel(),data2.ravel()]

# predicted probability of class 0 (malignant) at every grid point

decision_values=model.predict_proba(X_grid)[:,0]

sv=model.support_vectors_

fig=plt.figure(figsize=[14,12])

CS=plt.contour(data1,data2,decision_values.reshape(data1.shape),levels=[0.01,0.1,0.2,0.5,0.8,0.9,0.99])

plt.clabel(CS,inline=True,fontsize=10)

plt.scatter(sv[:,0],sv[:,1],marker='s',c='k',s=100)

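# A support vector machine has support vectors.

# Support vectors: the training samples that the cost function actually uses to define the decision function.

# The remaining points have no influence on it at all.

# In the figure, the samples drawn on a black square are the support vectors.

# Samples that fall on the wrong side of the margin line always become support vectors.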

plt.scatter(X_train_norm[:,0][y_train==0],X_train_norm[:,1][y_train==0],marker='o',c='r',label='malignant')

plt.scatter(X_train_norm[:,0][y_train==1],X_train_norm[:,1][y_train==1],marker='^',c='y',label='benign')

plt.legend()

plt.colorbar(CS,shrink=0.5)

plt.xlabel(cancer.feature_names[col1])

plt.ylabel(cancer.feature_names[col2])

plt.title('SVM - decision bounds',fontsize=20)
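The comments above say that only the support vectors shape the decision function. A minimal sketch of how that could be checked numerically follows, assuming the same two features and the same `C` and `gamma`. The `random_state=0` and the names `signed`, `margins`, and `is_sv` are illustrative additions, not part of the original post. `probability=True` is left out because only `decision_function` is needed here.

```python
import numpy as np
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Same two features as the post; random_state is an assumption for repeatability
cancer = load_breast_cancer()
X = cancer.data[:, [0, 5]]
y = cancer.target
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Standardize with statistics from the training split only
X_mean = X_train.mean(axis=0)
X_std = X_train.std(axis=0)
X_train_norm = (X_train - X_mean) / X_std

model = SVC(C=1, gamma=1)
model.fit(X_train_norm, y_train)

# Signed margin y * f(x), with the labels {0, 1} mapped to {-1, +1}
signed = np.where(y_train == 1, 1.0, -1.0)
margins = signed * model.decision_function(X_train_norm)

# model.support_ holds the training-set indices of the support vectors
is_sv = np.zeros(len(X_train_norm), dtype=bool)
is_sv[model.support_] = True

print("support vectors:", is_sv.sum(), "of", len(is_sv))
print("largest margin among support vectors:", margins[is_sv].max())
print("smallest margin among the other samples:", margins[~is_sv].min())
```

Up to the solver tolerance, support vectors should have a signed margin of at most 1 (on or inside the margin, or misclassified), and every other sample should sit at 1 or beyond. Note that the contours in the plot come from `predict_proba`, which is calibrated separately, so the 0.5 probability line need not coincide exactly with `decision_function == 0`.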
